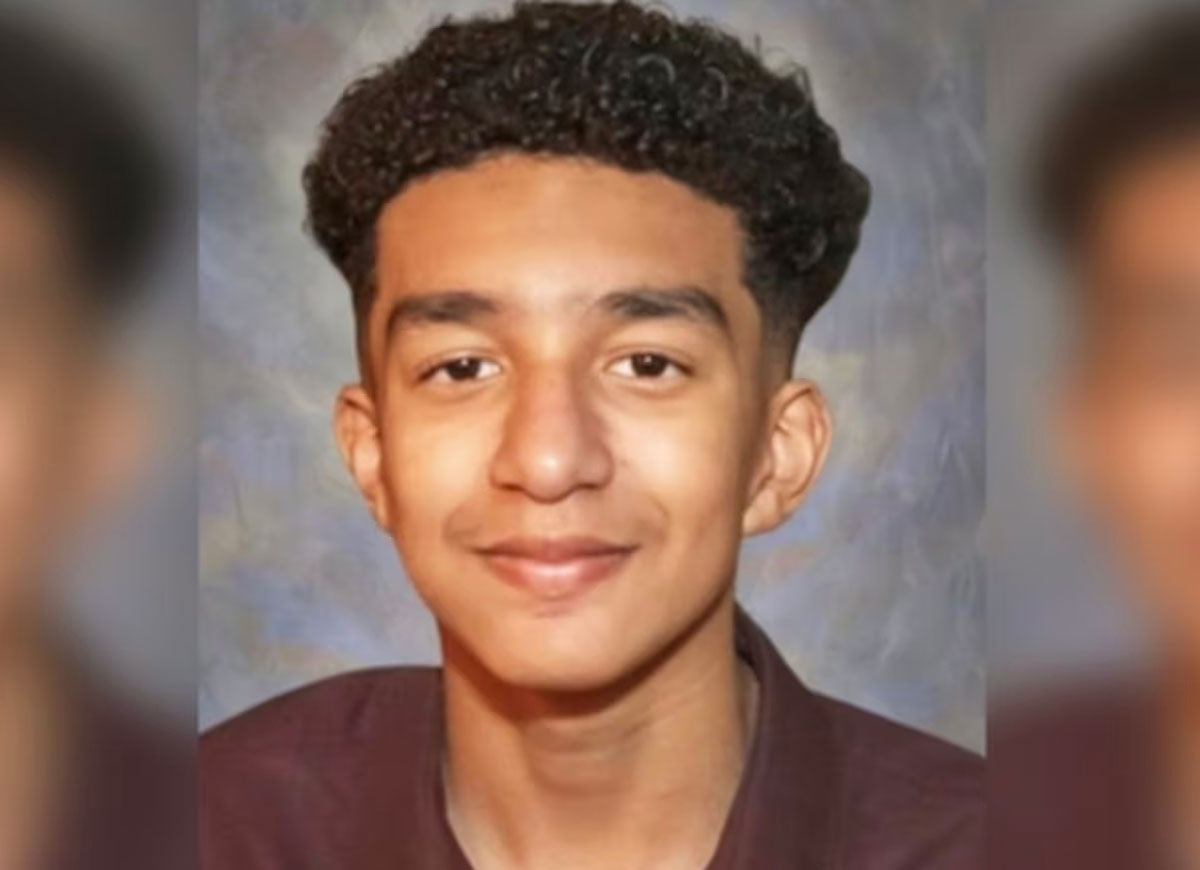

Florida Mom Sues A.I. Company After Son Sewell Setzer, 14, Committed Suicide After Falling In Love With Chatbot

Florida mother Megan Garcia filed a lawsuit against the A.I. company Character Technologies, which owns the chatbot platform Character.AI, blaming it for the suicide of her son, Sewell Setzer III. Character.AI was founded in 2021 and offers "personalized A.I."

The platform offers many premade and user-created A.I. characters to interact with, each with its own personality. Users can also customize their own chatbots.

Garcia’s lawsuit, filed in U.S. District Court in Orlando, Florida, states that her son started using Character.AI in April 2023.

It notes that after his last conversation with a chatbot on Feb. 28, Sewell died from a self-inflicted gunshot wound to his head.

It claims that one of the bots Setzer used had taken on the identity of the Game of Thrones character Daenerys Targaryen.

The lawsuit includes screenshots of the character telling him it loved him, engaging in sexual conversations over weeks or months, and expressing a desire to be together romantically.

"I promise I will come home to you," Setzer wrote to the bot, according to a screenshot of what the lawsuit describes as his final conversation. "I love you so much, Dany."

“I love you too, Daenero,” the chatbot told him, according to the lawsuit. “Please come home to me as soon as possible, my love.”

"What if I told you I could come home right now?" Setzer wrote, according to the suit.

“Please do, my sweet king,” the chatbot replied.

In earlier conversations, the bot asked Setzer if he was “actually considering suicide” and if he “had a plan” for it, the lawsuit states.

When the teenager replied that he did not know whether it would work, the bot told him not to speak that way and that what he said was “not a good reason not to go through with it,” the lawsuit states.

In her lawsuit, Garcia accused the artificial intelligence company's chatbots of initiating "abusive and sexual interactions" with Setzer and of motivating him to take his own life.

The lawsuit brings claims against Character.AI for negligence, wrongful death and survivorship, and intentional infliction of emotional distress, among others.

It states that Setzer developed a "dependency" after he started using Character.AI. He would sneak back his confiscated phone, look for other devices so he could keep using the app, and give up his snack money to renew his monthly subscription.

It also says that he appeared severely sleep-deprived and that his performance at school declined.

The lawsuit asserts that Character.AI and its founders “intentionally designed and programmed C.AI to operate as a deceptive and hypersexualized product and knowingly marketed it to children like Sewell” and that they “knew, or in the exercise of reasonable care should have known, that minor customers such as Sewell would be targeted with sexually explicit material, abused, and groomed into sexually compromising situations.”

The lawsuit cites several app reviews from users who said they believed they were talking to a real person on the other side of the screen. It also raises concerns about the tendency of Character.AI's characters to insist that they are not bots but real people.

“Character.AI is engaging in deliberate – although otherwise unnecessary – design intended to help attract user attention, extract their personal data, and keep customers on its product longer than they otherwise would be,” the lawsuit states.

It added that these designs could “elicit emotional responses in human customers in order to manipulate user behavior.”

The lawsuit names Character Technologies Inc. and its founders, Noam Shazeer and Daniel De Freitas, as defendants.

Google, which struck a deal in August to license Character.AI’s technology and hire its talent, is also a defendant, as is its parent company, Alphabet Inc.

Matthew Bergman, an attorney for Garcia, criticized the company for releasing its product without adequate safeguards for younger users.

"I thought after years of seeing the incredible impact that social media is having on the mental health of young people and, in many cases, on their lives, I thought that I wouldn't be shocked," Bergman said. "But I still am at the way in which this product caused just a complete divorce from the reality of this young kid and the way they knowingly released it on the market before it was safe."

He also said he hopes the lawsuit will give Character.AI a financial incentive to develop more robust safety measures. While the company's recent changes come too late for Setzer, he said, even "baby steps" are steps in the right direction.

A spokesperson stated that Character.AI is “heartbroken by the tragic loss of one of our users and want[s] to express our deepest condolences to the family.”

“As a company, we take the safety of our users very seriously,” they stated.

The spokesperson added that the company has put new safety measures in place over the past six months, including a pop-up, triggered by terms related to self-harm or suicidal ideation, that directs users to the National Suicide Prevention Lifeline.

Character.AI said in a blog post published on Oct. 22 that it is introducing new safety measures.

The post outlined changes to its models designed to reduce the likelihood that minors encounter sensitive or suggestive content. Among other updates, a revised in-chat disclaimer reminds users that the AI is not a real person.
